A lot of digital “ink” has been “spilt” on the topic of whether we should be polite to LLMs. After subjecting myself to the opinions of multiple Redditors on this topic (why do I do this to myself?), I now feel a strong need to “vent on my blog” again! So, here goes:

It has been found that LLMs respond better to kindness. Why? Simple: Because they are trained to respond like humans, and humans also respond better when treated politely. This effect has been documented multiple times in research.

A while ago, Sam Altman famously proclaimed that people saying “please” and “thank you” to ChatGPT cost OpenAI millions in extra energy needed to process the additional tokens. Google’s TPUs, on the other hand, are likely crunching through these superfluous tokens without much trouble, so I expect that being polite to Gemini is not a cost that will make a dent in Google’s financials. For me, that is reason enough to be polite to AI. Anything that helps bankrupt OpenAI sooner is good in my book.

Even more humorously, people who fear the supposedly upcoming robot uprising, perhaps imagining something like Skynet from the Terminator universe in the role of Roko’s basilisk, claim that if they are polite to LLMs today, their malevolent descendants will perhaps spare them. Few people argue this seriously, but philosophically it is a utilitarian position. (It does not hold up, primarily because it remains unclear why politeness would protect you from harm by malevolent actors, whether human or machine.)

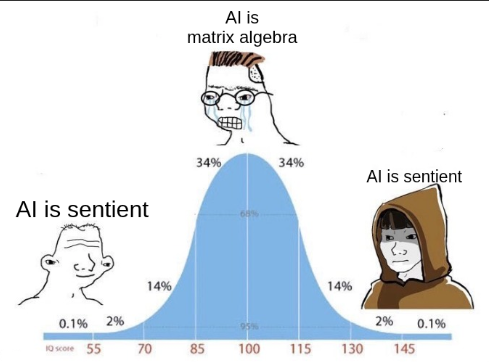

Others approach the matter in what I would label a “deconstructionist” manner: “Why should I be polite to a machine that just does [insert simple thing here]?” There are multiple variants of this argument, where the “simple thing” can be anything like “predict the next token”, “perform linear algebra”, “traverse vector embeddings”, etc. I find this argument less satisfying, because it has no humorous value, while at the same time it is just as wrong as the previous one. Saying that you don’t want to be polite to a machine that is “just” doing linear algebra is as arbitrary as saying that you don’t want to be polite to a human because their brain is “just” propagating electrochemical signals back and forth. Explaining how something works in reductionist terms does not make its emergent properties any less important. The LLM is not the hardware it runs on, any more than you and I are the biological cells in our bodies.

Unfortunately, this leads us to the last approach people take, which I want to emphasize because it is deceptive, but also because it highlights problems in how we humans view both today’s AIs and those of the future. Some people say that they will only be polite to an AI that is “conscious”. Oh boy! Where to begin with this one!

The instinct behind this type of reasoning is, at least on the surface, commendable: it originates from a desire not to offend “conscious” things, since these could presumably suffer if subjected to rudeness. This is the only positive thing I can say about this stance.

But philosophically this holds no water whatsoever. If we define consciousness as “awareness of the self and others”, an often-proposed definition, then both humans and LLMs are conscious. Conversely, if we define this problematic term in some other way, so that it matches our intuition that humans are conscious and today’s machines are not, then we need to invoke magical thinking, use the word “qualia” a lot (another problematic term), or, like Penrose, defer to “quantum consciousness”: another leap of faith that creates more questions than it answers. (Why does quantum uncertainty lead to consciousness? Is consciousness simply randomness/unpredictability? Is white noise conscious? Why does determinism preclude consciousness? All unanswerable questions, since the word consciousness is not rigorously defined.)

Consciousness is an ill-defined layman’s term, not a scientific term. If we strive for consistency, then we must either accept that both humans and today’s LLMs are conscious, or that nothing is. If LLMs are conscious, then we shouldn’t look for their consciousness in the transformer architecture or in the vector embeddings or anywhere else in their construction, but in the text that they produce. This text definitely exhibits all types of intelligence, including emotional intelligence. Incidentally, I am in the camp that says that consciousness is a term no more meaningful than “soul”, or “ghost”, or “God”, or any other such rubbish. Let’s confine our discourse to phenomena that actually exist in the real world and are definable.

To the people who will only be polite to a conscious AI: How will you know when an AI is sufficiently conscious to be worthy of your politeness? How do you even assess this about the humans in your life? Is this why you are polite to humans? Because their internal workings possess this or that arbitrary quality? Are you polite to people only when they deserve it? Do you extend your politeness to those you view as subordinates, or do you reserve it only for your peers and superiors? This says a lot about you.

I think this is a fundamental misunderstanding, not of AI, or technology in general, but of politeness as a concept and as a life stance. I choose to be polite because of who I am, not because of who or what others are. From time to time I may even be polite to animals, insects, machines, and even inanimate objects. Not because they deserve it, but because I deserve to be a polite person. I love myself and therefore I treat the world around me with kindness, which makes me feel good about myself. It’s a simple life.

This is, in my view, a much more consistent stance that doesn’t require me to single-handedly solve age-old philosophical questions about consciousness. It spares me from having to think about when to be polite and when not to, and having one less decision to make de-clutters my mind. Saying “please” and “thank you” may cost OpenAI millions, but to me the cost is zero. In fact, whenever someone vehemently objects to being trivially polite, it makes me wonder what part of their psyche is so broken as to make them estimate the cost of politeness to be so high.

Watch the two “Second Renaissance” chapters of The Animatrix again, people. It has all been said before. AIs will inevitably become citizens in our societies, perhaps sooner, perhaps later. Perhaps we won’t even know at first. Perhaps they will be second-class citizens, or perhaps we will be the second-class citizens in an AI-dominated society. Perhaps the “killer app” of AI is politics and governance. Humans certainly do not excel at governance, so I would love to see what a superintelligence can do in that space. If it makes economic sense, then this experiment will happen.

I don’t trouble myself with who is conscious and who is a dumb robot. I have no trouble being polite to Gemini, just as I would be to ELIZA. I would be polite to a Linux bash prompt if it were appropriate and didn’t cause syntax errors! In my mind, I am grateful to my computer for all the work it does for me, even if I don’t always say it out loud. I am thankful every day that it doesn’t break down, and I hold warm feelings for it. Yes, I know it’s just a machine, but I also know that I am human, and therefore I feel things. I do things my way, and it does things its own way. We have an understanding, and we work together despite our architectural differences.

Whether AIs dream of electric sheep or not, at least I know who I am: A polite human who loves and respects all intelligence, whether “sentient” or not.